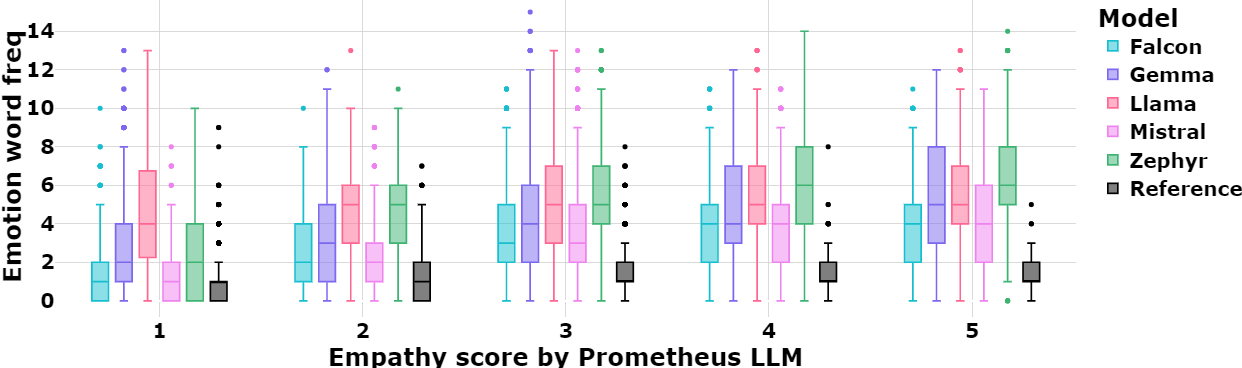

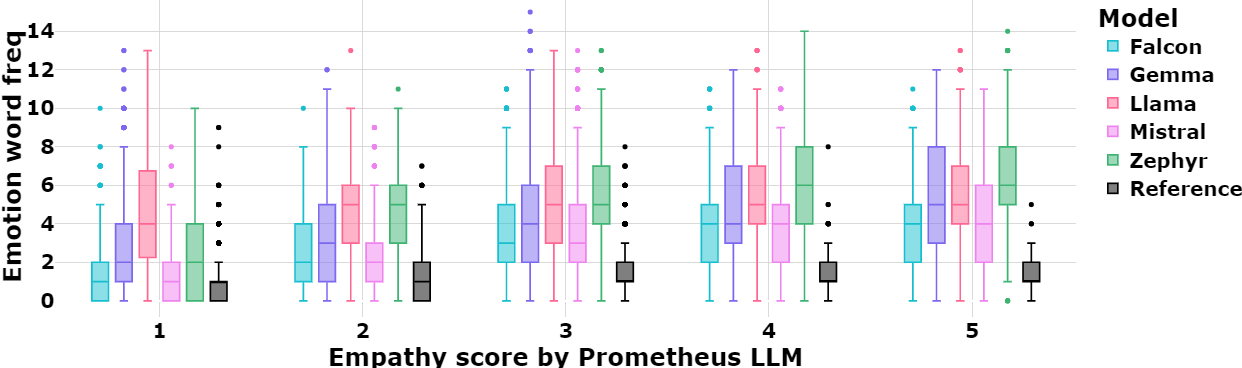

Large Language Models (LLMs) have demonstrated capabilities beyond basic text generation, such as question answering, translation, and even stylistic text generation. Since these models are available for public use, enormous effort has been put into safety engineering to ensure that they do not generate undesirable or harmful text and that their generations are polite and empathetic. In this work, we examine the inherent empathy capabilities of five open-source LLMs and evaluate them from multiple angles using automated metrics to understand their capabilities and limitations. In the context of this work, “inherent” refers to the LLM’s ability to generate empathetic text without being explicitly prompted to do so. We examine whether the model treats empathy as a style change or demonstrates some understanding of the specific user’s context. We find that LLMs use more emotion words in their generations than humans do. They can also infer the user’s emotional state, a crucial characteristic of empathy. Because generations are obtained through probabilistic sampling, responses tend to drift away from the user’s actual intent; in such cases, specific prompting allows the model to respond appropriately. We summarize the differences observed between human and LLM generations and conclude with a potential research direction for empathetic dialog generation that leverages the capabilities of LLMs.

@inproceedings{maheswaran_probing_2025,

title = {Probing the {Inherent} {Ability} of {Large} {Language} {Models} for {Generating} {Empathetic} {Responses}},

url = {https://ieeexplore.ieee.org/document/11081499},

doi = {10.1109/SDS66131.2025.00012},

abstract = {Large Language Models (LLMs) have demonstrated capabilities beyond basic text generation, like question answering, translation, and even stylistic text generation. Since these models are available for public use, enormous effort has been put into safety engineering to ensure that undesirable and harmful text is not generated and the generations are polite and empathetic. In this work, we examine the inherent empathy capabilities of five open-source LLMs and evaluate them from multiple angles using automated metrics to understand their capabilities and limitations. In the context of this work, “inherent” refers to the LLM's ability to generate empathetic text without having to explicitly prompt for it. We examine if empathy is treated as a style change or is the model demonstrating some understanding of the specific user's context. We find that LLMs use more emotion words than humans in their generations. They can also infer the user's emotional state, a crucial characteristic of empathy. Due to the probabilistic nature of obtaining generations, there is a tendency for the responses to drift away from the user's actual intent. In such cases, specific prompting allows the model to respond appropriately. We summarize the differences observed between human and LLM generations and conclude with a potential research direction for empathetic dialog generation that leverages the capabilities of LLMs.},

urldate = {2025-07-26},

booktitle = {2025 {IEEE} {Swiss} {Conference} on {Data} {Science} ({SDS})},

author = {Maheswaran, Aishwarya and Chua, Caslon and Desarkar, Maunendra Sankar},

month = jun,

year = {2025},

note = {ISSN: 2835-3420},

keywords = {Conversational AI, Data science, Emotion recognition, Generative AI, Large language models, Measurement, Probabilistic logic, Question answering (information retrieval), Safety, Training, Translation},

pages = {32--39},

}